The dynamic driving simulator (hexapod test bench) consists of a real semi-vehicle (VW Golf) mounted on a hydraulic motion platform. The driver’s cab can be moved in six degrees of freedom. Four projectors project the scenery onto the windows of the cab, and an additional monitor serves as the rear-view mirror, in order to offer test subjects a driving experience that is as realistic as possible. In addition, the interior of the vehicle has been modified so that a number of tablet computers cover the instrument cluster, the center console, and the passenger area. This allows novel interaction concepts to be implemented and evaluated.

Studies conducted in the hexapod:

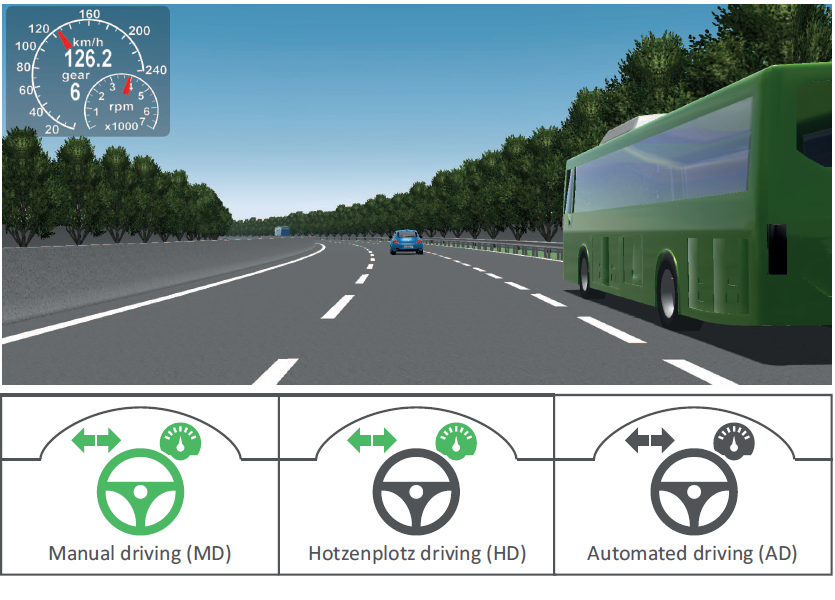

Driving Hotzenplotz

A Hybrid Interface for Vehicle Control Aiming to Maximize Pleasure in Highway Driving

We combined the advantages of manual (autonomy) and automated (increased safety) driving by developing the Hotzenplotz interface. It combines possibility-driven design with psychological user needs.

Traffic Augmentation

Vehicle sensors (radar/lidar) can see through fog, which allows an automated vehicle to drive faster than a human driver could. Do the occupants have more confidence in an automated vehicle driving through thick fog and overtaking when the other road users are marked?

In UX We Trust

In the evolution of technical systems, freedom from error and early adoption play a major role in market success and in maintaining competitiveness. In the case of automated driving, we see that faulty systems are put into operation and that users trust these systems, often without any restrictions. Trust and use are often associated with users’ experience of the driver-vehicle interfaces and interior design.

Workholistic

We investigated whether a hardware keyboard mounted on a steering wheel has the potential to provide a productive typing environment in automated vehicles.

Steer by Wifi

According to SAE J3016, passengers in a Level ≥ 3 vehicle do not have to monitor the traffic continuously. As a result, non-driving-related tasks (NDRTs) will increase. The idea: using, e.g., tablets for vehicle control in the case of takeover requests (TORs). The following questions were analyzed: Can we steer faster with a tablet? Does it achieve similar steering precision, and is it accepted by test subjects?

Let Me Finish before I Take Over

Can attentive UIs improve NDRT and TOR performance?

We evaluated whether two attentive user interface (AUI) features lead to better TOR/NDRT performance, less stress, and higher trust/acceptance: (1) precisely timing TOR notifications at task boundaries (between tasks rather than within a task) and (2) presenting TOR notifications directly on the consumer device (device integrated) rather than on in-vehicle information systems only (IVIS only).

Why Do You Like To Drive Automated?

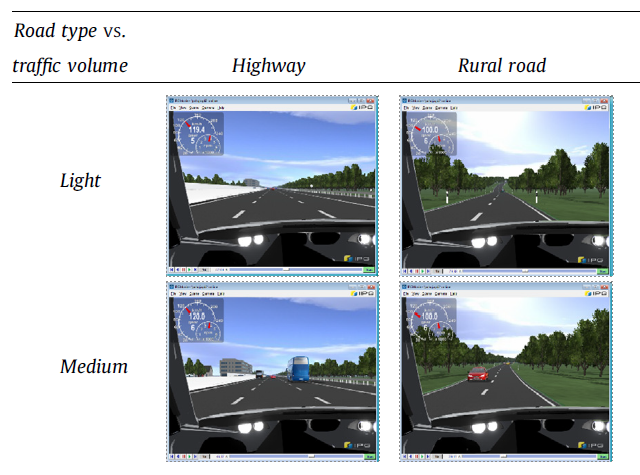

We present a need-centered development approach in two studies to create a positive user experience in highly/fully automated driving (AD). In the first study, we analyze the fulfillment of psychological needs in different contextual variations of driving scenarios, such as road type (highway, rural, urban road) or traffic volume (low, moderate, high). In the second, we compare manual and highly/fully automated driving in a simulator environment, suggesting cooperative control as a possible solution for enhancing AD experience.

AccidentTOR

In AccidentTOR, we examined the following questions in an SAE Level 3 driving simulator:

- What could the mobile office of the future look like? How should the interfaces be structured in such a vehicle? Text comprehension was examined by varying the display modality (heads-up reading vs. auditory listening) and UI behavior in connection with takeover situations (attention awareness vs. no attention awareness).

- Comparing heads-up displays (HUDs) and auditory speech displays (ASDs) for productive NDRT engagement. The effectiveness of the NDRT displays was evaluated by eye-tracking measures and compared to workload measurements, self-assessments, and NDRT/takeover performance.

- Do subjects who have suffered a serious traffic accident respond differently to takeover requests during non-driving tasks than subjects who have not experienced an accident?

Olfactory Displays

Overreliance on technology is safety-critical, and it is assumed that it could have been the main cause of severe accidents involving automated vehicles. To ease the complex task of permanently monitoring vehicle behavior in the driving environment, researchers have proposed implementing reliability/uncertainty displays.

We suggest using olfactory displays as a potential solution to communicate system uncertainty.